|

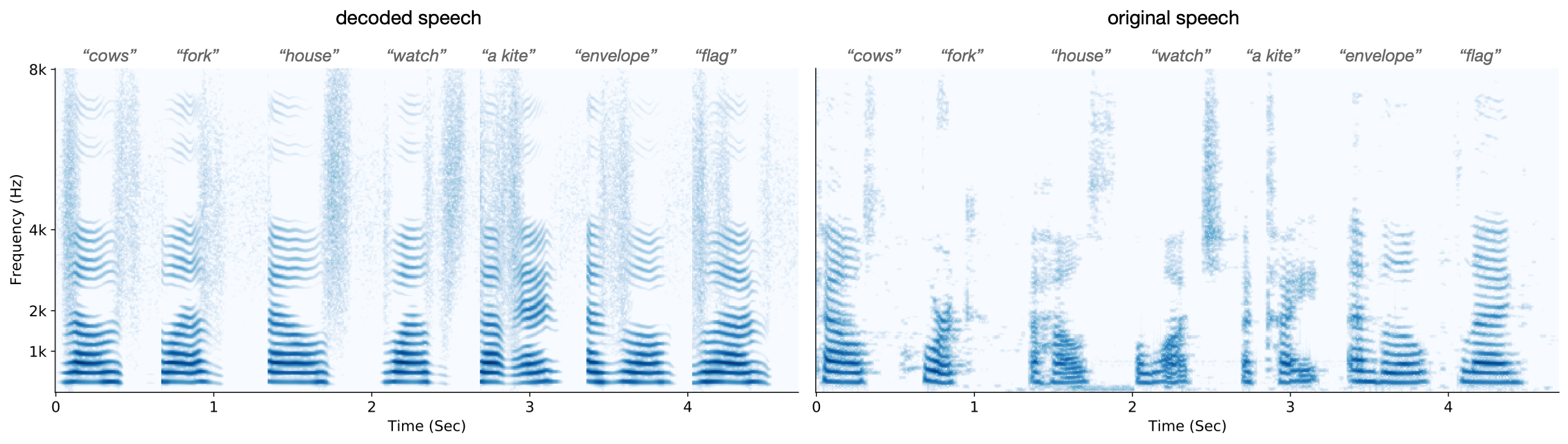

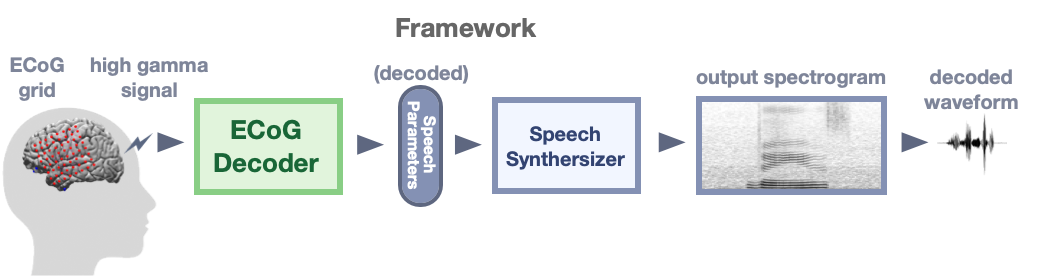

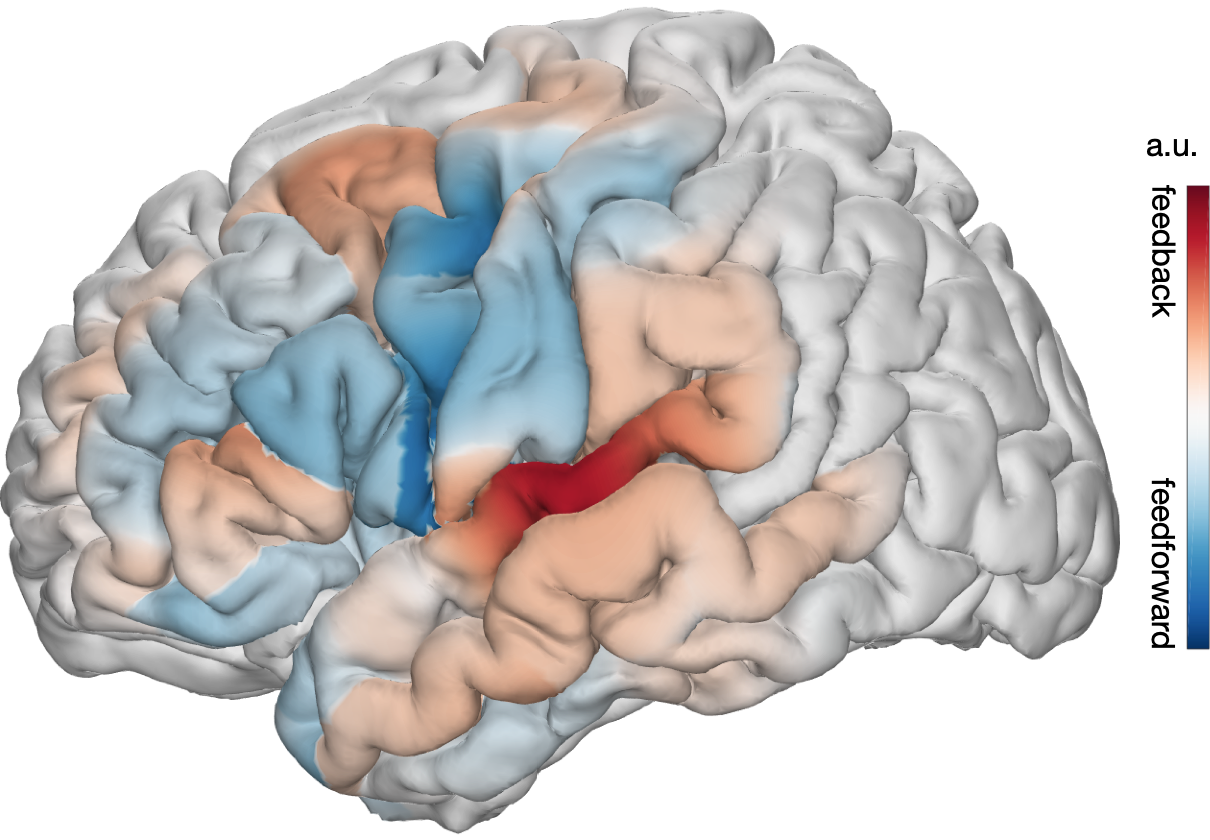

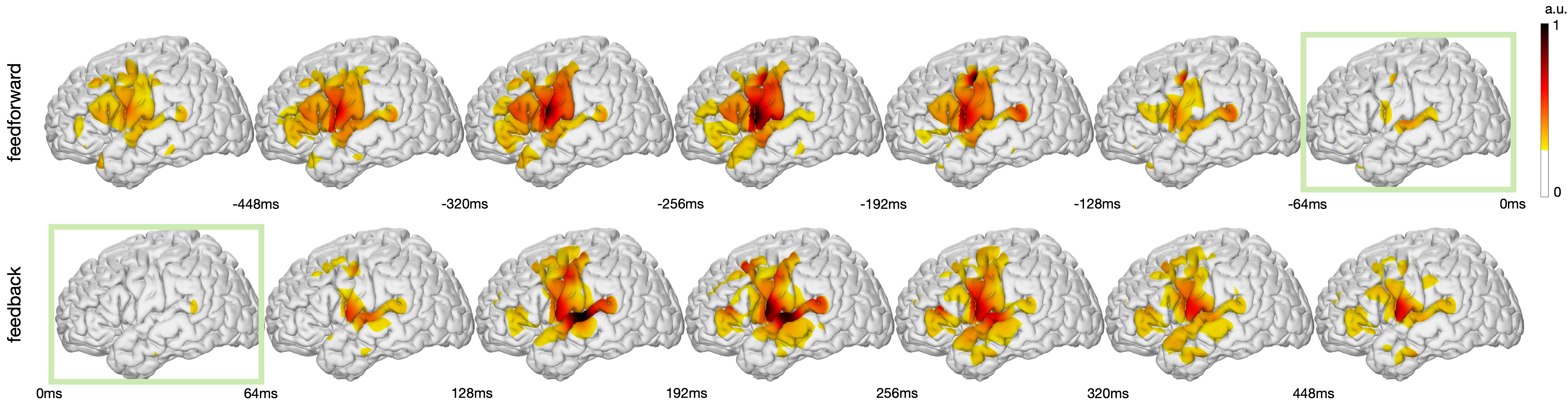

Decoding speech from human neural activity can enable neuro-prosthetics. Deep-learning approaches to neural speech decoding have shown promising results. The scarcity of training data, however, still hinders a complete brain-computer interface (BCI) application. In this work, we propose a novel neural speech decoding framework that maps neural signals to either stimulus (perceived) speech or produced speech during various language tasks. In the network design, we separate the feedforward and feedback components of cortical speech control. Our proposed model can produce natural speech, with quantitative metrics substantially exceeding those of our previous work and of the state of the art. Furthermore, we evaluate the contribution of each cortical region to the decoding performance. We reveal the feedforward and feedback contributions to decoding produced speech, as well as the feedback contributions to decoding perceived speech. Our analysis provides evidence of a mixed cortical organization in which pre-frontal regions, classically believed to be involved in motor planning and execution, were engaged both in predicting future motor actions (feedforward) and in processing the perceived feedback from speech (feedback). Similarly, within the superior temporal gyrus, which is classically involved in speech perception, we found evidence of anterior regions that predicted future speech (feedforward) and more posterior regions that processed reafferent speech feedback. Our findings are the first to systematically disentangle the dynamics of feedforward and feedback processing during speech, and they provide evidence for a surprisingly mixed cortical architecture within the temporal and frontal cortices. Our approach provides a promising new avenue for using deep neural networks in neuroscience studies of complex dynamic behaviors. |